Generative AI

Generative AI refers to a class of artificial intelligence models and techniques that are capable of generating new, original content such as text, images, audio, code, and other data types. These models are trained on massive datasets, allowing them to learn the underlying patterns, structures, and relationships within the data. By leveraging techniques like deep learning, neural networks, and transformer architectures, generative AI models can then create novel outputs that resemble the training data but are unique and previously unseen.
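The idea of learning patterns from training data and then sampling new output can be sketched with a toy model. The example below is only an illustration, not how modern generative models are built: it assumes a tiny made-up corpus and uses a word-level bigram (Markov chain) model in place of a neural network. The names `train_bigram_model` and `generate` are hypothetical helpers introduced for this sketch.

```python
import random
from collections import defaultdict


def train_bigram_model(corpus):
    """Record, for every word, the words that followed it in the training text."""
    transitions = defaultdict(list)
    words = corpus.split()
    for current_word, next_word in zip(words, words[1:]):
        transitions[current_word].append(next_word)
    return transitions


def generate(transitions, start_word, max_words=8, seed=0):
    """Sample a word sequence by repeatedly following learned transitions."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    output = [start_word]
    while len(output) < max_words:
        candidates = transitions.get(output[-1])
        if not candidates:  # dead end: this word never had a successor in training
            break
        output.append(rng.choice(candidates))
    return " ".join(output)


# Toy training data (an assumption for illustration only).
corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigram_model(corpus)
sample = generate(model, "the")
print(sample)
```

Every adjacent word pair in the output was seen during training, yet the full sequence may never appear in the corpus. Neural generative models follow the same train-then-sample pattern, but replace the lookup table with learned parameters that capture much longer-range structure.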

At the core of generative AI are large foundation models. Large Language Models (LLMs) like GPT-3 and GPT-4 have demonstrated remarkable capabilities in generating human-like text, creative writing, and code, while text-to-image models like DALL-E can produce photorealistic images from textual descriptions. Many of these models use self-attention mechanisms and self-supervised learning (often described as unsupervised, since no manually labeled data is required) to capture the context and nuances of the training data, enabling them to generate coherent and contextually relevant outputs.
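To make the self-attention mechanism concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is deliberately simplified: it assumes the queries, keys, and values are the input vectors themselves, whereas real transformer layers apply learned projection matrices and use multiple attention heads. The function names and the toy `tokens` values are assumptions made for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def self_attention(vectors):
    """Scaled dot-product self-attention with Q = K = V = vectors.

    Simplification: no learned projections and a single head.
    """
    scale = math.sqrt(len(vectors[0]))
    outputs = []
    for query in vectors:
        # How strongly this position attends to every position (itself included).
        weights = softmax([dot(query, key) / scale for key in vectors])
        # The new representation is a weighted average of all value vectors.
        mixed = [
            sum(w * value[i] for w, value in zip(weights, vectors))
            for i in range(len(query))
        ]
        outputs.append(mixed)
    return outputs


# Three toy 2-dimensional "token embeddings" (made-up values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextual = self_attention(tokens)
for row in contextual:
    print([round(x, 3) for x in row])
```

Each output row is a blend of all input vectors, weighted by similarity to the query. This is how a token's representation comes to reflect its surrounding context.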

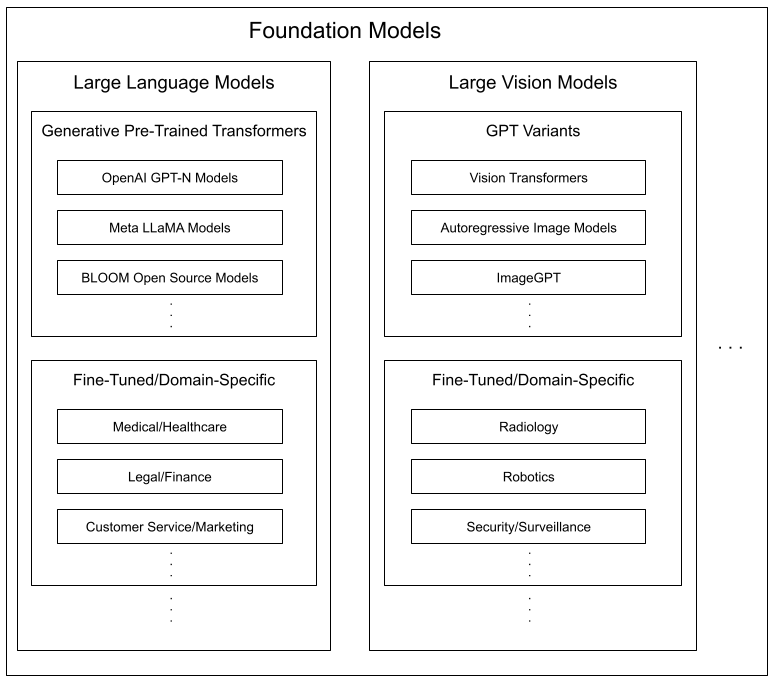

The diagram below illustrates how generative models fit into the larger context of Foundation Models:

Overall, generative AI represents a significant advancement in Artificial Intelligence, enabling machines to create original content and augment human creativity in unprecedented ways. As the technology continues to evolve, it holds the potential to revolutionize various industries and reshape the way we interact with and leverage AI systems.

Applications

Generative AI has opened up a wide range of applications across various industries:

In content creation, it can assist with tasks like writing articles, stories, scripts, and marketing copy.

In software development, it can generate code snippets, documentation, and even entire programs.

In scientific research, generative models can aid in molecular design, drug discovery, and data augmentation.

In creative fields like art, music, and game development, generative AI has shown promise in producing novel artistic works.

Challenges and Limitations

Generative AI models also face challenges and limitations. Their outputs can be biased, inconsistent, or factually incorrect, because the models are trained on data that may itself contain biases or inaccuracies. There are also concerns about the potential misuse of generative AI for spreading misinformation, generating deepfakes, or infringing on intellectual property rights. As a result, ongoing research focuses on improving the reliability and safety of these models and on addressing the ethical concerns they raise, as well as on techniques like fine-tuning, prompt engineering, and model distillation to enhance their performance and control their outputs.